Data is just a sketch of reality, not reality itself.

I haven’t found anyone talking about this fundamental gap. Data is the oil of the modern approach to developing AI and machine learning, so we build AI's “understanding” of the world on abstractions, not on the rich details of actual life.

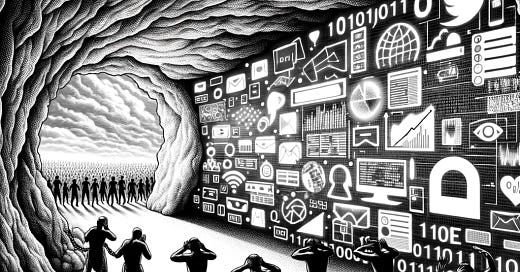

In other words, we've trained our machines to see patterns in the shadows of reality, and they learn to predict the future from these abstractions. This raises the question: are we teaching them to navigate the world as it is or merely as we've coded it to be?

It is crucial to recognize that the way computers and humans learn and understand the world is fundamentally different. This difference is not a mere technicality but a profound insight that guides how we should design and use AI systems.

It is often said that the new wave of GenAI is going to replace knowledge workers. And there’s some truth to that. But does AI produce “knowledge,” as they say?

I stressed this point in my book.

“AI systems and machine learning can only “see” the world through the data we feed to them. So, they see an extremely limited version of the world. Data will always be a limitation of AI. ML programs can then automatically organize data into information, which can provide knowledge, but it is up to us humans to critically evaluate how information was generated and how to use it—wisdom is a human’s objective. We cannot leave the task of deriving wisdom to a machine.”

Excerpt from The Ethics of AI, Alberto Chierici.

Data is a simplification of reality

At its core, data is our attempt to capture and quantify the world around us: a simplification, or abstraction, of reality. Take color, for instance. To a physicist, color corresponds to the wavelength of light, a quantifiable measure. A designer, however, might think in terms of RGB codes. Both are valid yet simplified representations of a much richer human experience of color. This illustrates a fundamental truth: data, by nature, reduces the complexity of reality to something more manageable, but in doing so, it leaves out nuances and dimensions that might be critical for understanding the full picture.
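To make the color example concrete, here is a minimal sketch of how recording a color as an RGB code throws information away. The function name `to_rgb8` and the two sample greens are hypothetical, chosen only for illustration; the values are made up, not measurements.

```python
# Toy illustration: encoding a color as 8-bit RGB discards detail.
# The channel intensities below are made-up values, not measurements.

def to_rgb8(r, g, b):
    """Quantize channel intensities in [0.0, 1.0] to 8-bit integers (0-255)."""
    return tuple(min(255, max(0, round(c * 255))) for c in (r, g, b))

# Two slightly different greens, as they might exist before being recorded.
green_a = (0.200, 0.600, 0.310)
green_b = (0.201, 0.601, 0.311)

# Once stored as data, each green becomes a triple of small integers.
code_a = to_rgb8(*green_a)
code_b = to_rgb8(*green_b)

print("recorded codes:", code_a, code_b)
print("same before recording?", green_a == green_b)
print("same after recording? ", code_a == code_b)
```

The two greens differ before they are recorded, yet both end up as the same code, (51, 153, 79). Anything that learns only from the recorded codes has no way to tell them apart, and the RGB triple was already a simplification of the experience of seeing green in the first place.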

While AI systems can process and analyze data at an unprecedented scale and speed, they're ultimately limited by the nature of their input. If data is a simplification of reality, then AI's “understanding” of the world is inherently constrained. It can't appreciate the emotional resonance of a green field in the same way a human can, nor can it understand the myriad ways in which cultural context shapes our interpretation of information.

AI, no matter how advanced, operates within the confines of this simplified reality. It learns from and makes decisions based on data that inherently lacks the full spectrum of human perception, experience, and understanding of the world. This limitation is not just a technical hurdle to be overcome with more data or better algorithms; it's a fundamental characteristic of how AI interacts with the world.

Ignoring this limitation can lead to misunderstandings and misplaced expectations about what AI can achieve. We've seen time and again how AI systems can falter when faced with situations that fall outside the narrow confines of their training data. From misinterpretation of nuances in language to misrecognition of images outside their datasets, these failures underscore the importance of acknowledging and designing for AI's inherent limitations.
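A toy sketch can show what falling outside the training data looks like. It assumes a deliberately simple setup that is not taken from any real system: the "world" is the curve y = x², the "model" is a straight line fitted by least squares, and the training data covers only x between 0 and 1. The names `fit_line`, `truth`, and `predict` are hypothetical.

```python
# Toy illustration: a model fitted on a narrow slice of the world
# looks fine inside that slice and fails badly outside it.

def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

def truth(x):
    """The 'real world' in this sketch: a simple curve (an assumption)."""
    return x * x

# Training data only covers x in [0, 1].
train_xs = [i / 10 for i in range(11)]
train_ys = [truth(x) for x in train_xs]

slope, intercept = fit_line(train_xs, train_ys)

def predict(x):
    """The fitted straight-line model."""
    return slope * x + intercept

# Worst error on the data the model has seen, and error far outside it.
in_range_error = max(abs(predict(x) - truth(x)) for x in train_xs)
out_of_range_error = abs(predict(3.0) - truth(3.0))

print("worst error inside training range:", round(in_range_error, 2))
print("error at x = 3 (never seen):      ", round(out_of_range_error, 2))
```

In this sketch the worst error inside the training range is about 0.15, while at x = 3 it is about 6.15. The model is not broken; it simply never saw that part of the world, and nothing in its data hinted that the curve keeps bending.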

But there’s opportunity in limitation

Yet, within this limitation lies opportunity. By accepting that AI cannot replicate the full depth of human experience, we can focus on leveraging AI in ways that complement human intelligence rather than attempt to replace it. Or rather, since we should stop using the word "intelligence" inappropriately: in ways that complement human judgment rather than replace it.

One of the most promising opportunities is in augmenting human decision-making. AI can process and analyze data at scales and speeds unattainable for humans, providing insights that can inform and enhance human judgment. This collaborative approach can lead to better outcomes in fields ranging from medicine to urban planning, where the complexity of human experience is integral to decision-making.

A path forward

Our challenge is to keep these limitations in mind not only when developing AI systems but also when using them. This means investing in research, initiatives, and education programs that explore not just how AI can be made more powerful but also how we can make its creators and users more aware of its constraints.

In embracing the limitations of AI, we open the door to a more nuanced and realistic understanding of what AI can and cannot do. This understanding is critical for developing and using AI technologies that are not only effective but also ethical and socially responsible. As we continue to explore the vast potential of AI, let's do so with a clear-eyed recognition of its limitations, using them not as barriers to innovation but as guides to more meaningful and human-centric applications.

How do you see the role of data shaping the future of AI in your field? Share your thoughts and experiences on how we can balance the strengths of AI with the complexities of the real world.